Lerman 64-KNC Cluster

Lerman has 16 nodes, each with 4 Intel Xeon Phi Knights Corner (KNC) coprocessors, for a total of 64 coprocessors. The nodes are connected with a QDR InfiniBand high-speed interconnect.

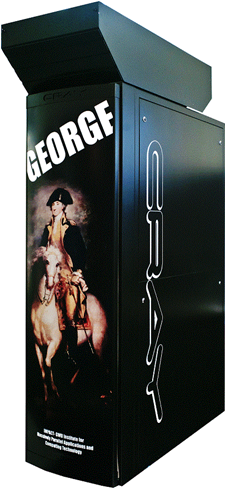

Cray XK7 Supercomputer (George)

George highlights: (1) 1856 AMD processor cores; (2) around 50 TFLOPS; (3) 2.4 TB of memory; (4) a state-of-the-art Gemini 2D torus interconnect; (5) 32 NVIDIA Kepler K20 GPUs.
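The "around 50 TFLOPS" figure can be sanity-checked by adding a CPU peak to a GPU peak. Only the core count (1856) and the GPU count (32) come from the text. The 2.1 GHz CPU clock, the 4 double-precision FLOPs per core per cycle, and the 1.17 TFLOPS double-precision peak per K20 are assumptions. Treat the result as a rough estimate, not the system's official rating.

```python
# Rough peak-performance breakdown for George (Cray XK7).
# ASSUMPTIONS (not stated in the text): 2.1 GHz CPU clock, 4 double-precision
# FLOPs per core per cycle, and 1.17 TFLOPS double-precision peak per K20 GPU.

CPU_CORES = 1856            # from the text
CPU_CLOCK_GHZ = 2.1         # assumption
CPU_DP_FLOPS_PER_CYCLE = 4  # assumption
GPU_COUNT = 32              # from the text
GPU_DP_TFLOPS = 1.17        # assumption: K20 double-precision peak


def cpu_peak_tflops(cores, clock_ghz, flops_per_cycle):
    """Theoretical CPU peak: cores x GHz x FLOPs/cycle gives GFLOPS; /1000 -> TFLOPS."""
    return cores * clock_ghz * flops_per_cycle / 1000.0


def total_peak_tflops():
    """Return (CPU peak, GPU peak, combined peak) in TFLOPS."""
    cpu = cpu_peak_tflops(CPU_CORES, CPU_CLOCK_GHZ, CPU_DP_FLOPS_PER_CYCLE)
    gpu = GPU_COUNT * GPU_DP_TFLOPS
    return cpu, gpu, cpu + gpu


if __name__ == "__main__":
    cpu, gpu, total = total_peak_tflops()
    print(f"CPU {cpu:.1f} + GPU {gpu:.1f} = {total:.1f} TFLOPS")
```

Under these assumptions the sketch gives about 15.6 TFLOPS for the CPUs and 37.4 TFLOPS for the GPUs. That is about 53 TFLOPS in total, consistent with the quoted figure.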

Pyramid 1048-Core Cluster

Pyramid is composed of 131 Sun X2200 M2 x64 servers, each with two quad-core 2.2 GHz Opteron processors and 8 GB of RAM, for a total of 1048 cores. The cluster has a theoretical peak performance of 9.2 TFLOPS. The nodes are interconnected with stackable Gigabit Ethernet (GigE) switches.
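Both quoted figures follow from the per-server configuration. The sketch below reproduces them. It assumes each 2.2 GHz quad-core Opteron core can retire 4 double-precision FLOPs per cycle (one 128-bit SSE add plus one 128-bit SSE multiply); that per-cycle rate is not stated in the text.

```python
# Worked check of Pyramid's core count and theoretical peak.
# ASSUMPTION: 4 double-precision FLOPs per core per cycle.

SERVERS = 131
SOCKETS_PER_SERVER = 2
CORES_PER_SOCKET = 4
CLOCK_GHZ = 2.2
DP_FLOPS_PER_CYCLE = 4  # assumption, not from the text


def total_cores():
    """Servers x sockets x cores per socket."""
    return SERVERS * SOCKETS_PER_SERVER * CORES_PER_SOCKET


def peak_tflops():
    """cores x GHz x FLOPs/cycle gives GFLOPS; divide by 1000 for TFLOPS."""
    return total_cores() * CLOCK_GHZ * DP_FLOPS_PER_CYCLE / 1000.0


if __name__ == "__main__":
    print(total_cores())            # 1048
    print(round(peak_tflops(), 2))  # 9.22
```

This gives 1048 cores and about 9.22 TFLOPS, matching the quoted 9.2 TFLOPS theoretical peak.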

SGI RC100

The SGI RC100 platform consists of 3 CPU blades, each with a 1.6 GHz Intel Itanium 2 processor, and 3 RASC blades, each populated with 2 Xilinx Virtex-4 LX200 FPGAs and 80 MB of QDR memory. The blades are interconnected via dual NUMAlink 4 ports providing 12.8 GB/s of bandwidth.
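The 12.8 GB/s figure sets a lower bound on how long it takes to stage data into or out of a RASC blade. The sketch below computes that idealized bound under three assumptions: the full 12.8 GB/s is available to a single transfer, units are decimal (1 MB = 10^6 bytes), and protocol overhead is ignored. Real transfers will take longer.

```python
# Idealized transfer-time bound over the dual NUMAlink 4 ports.
# ASSUMPTIONS: full 12.8 GB/s available to one transfer, decimal units,
# no protocol overhead.

LINK_BANDWIDTH_BYTES_PER_S = 12.8e9


def transfer_time_ms(size_bytes, bandwidth=LINK_BANDWIDTH_BYTES_PER_S):
    """Lower bound, in milliseconds, on the time to move size_bytes."""
    return size_bytes / bandwidth * 1e3


if __name__ == "__main__":
    # Filling one RASC blade's 80 MB of QDR memory:
    print(f"{transfer_time_ms(80e6):.2f} ms")  # 6.25 ms
```

For example, under these assumptions moving one blade's full 80 MB of QDR memory takes at least about 6.25 ms.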

Cray XD1

The Cray XD1 platform consists of 6 blades, each populated with 2 Opteron 250 (2.4 GHz) processors and 8 GB of RAM; 6 application FPGA daughter cards, each populated with a Xilinx Virtex-II Pro 50 FPGA with 4 banks of 8 MB QDR SRAM; and a Cray RapidArray fabric switch with an aggregate bandwidth of 48 GB/s.
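The per-blade and per-card figures above multiply out to the system totals below. This short tally reads the 8 GB of RAM as per blade, following the sentence structure. All other counts come straight from the text.

```python
# Tally of the Cray XD1 resources described above.
# Counts are from the text; RAM is read as 8 GB per blade.

BLADES = 6
OPTERONS_PER_BLADE = 2
RAM_GB_PER_BLADE = 8
FPGA_CARDS = 6
SRAM_BANKS_PER_FPGA = 4
SRAM_MB_PER_BANK = 8


def xd1_totals() -> dict:
    """Return aggregate processor, memory, and FPGA-attached SRAM figures."""
    sram_per_fpga = SRAM_BANKS_PER_FPGA * SRAM_MB_PER_BANK
    return {
        "opteron_sockets": BLADES * OPTERONS_PER_BLADE,
        "ram_gb": BLADES * RAM_GB_PER_BLADE,
        "sram_mb_per_fpga": sram_per_fpga,
        "sram_mb_total": FPGA_CARDS * sram_per_fpga,
    }


if __name__ == "__main__":
    for key, value in xd1_totals().items():
        print(f"{key}: {value}")
```

Each FPGA therefore has 32 MB of QDR SRAM (4 x 8 MB), or 192 MB across the six daughter cards. The blades contribute 12 Opteron 250 processors and 48 GB of RAM.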

GPU Cluster

The GPU cluster is a heterogeneous multicore platform with 12 compute nodes and 1 head node. Every node has two quad-core Intel Xeon E5520 (Nehalem) processors at 2.27 GHz. Four of the compute nodes have 24 GB of memory each and are equipped with two NVIDIA Tesla S1070 GPU systems. The other eight compute nodes have 12 GB of memory each, and each is connected to one NVIDIA Tesla C1060 GPU card. The head node has 24 GB of memory and 16 TB of storage. All of the nodes are interconnected using QDR InfiniBand.
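The cluster mixes two kinds of compute node, so a small table of node groups is a convenient way to total its resources. The `NodeGroup` schema below is hypothetical; the node counts, sockets, cores, and memory sizes come from the text. GPU attachment is left out because the text does not say whether the two Tesla S1070 systems are per node or shared among the four nodes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeGroup:
    """A set of identical nodes in the GPU cluster (hypothetical schema)."""
    role: str              # "compute" or "head"
    count: int             # number of nodes in this group
    sockets: int           # CPU sockets per node
    cores_per_socket: int  # cores per CPU socket
    mem_gb: int            # memory per node, in GB


# Node groups as described in the text (GPU attachment omitted).
GPU_CLUSTER = [
    NodeGroup("compute", 4, 2, 4, 24),  # nodes equipped with Tesla S1070 systems
    NodeGroup("compute", 8, 2, 4, 12),  # nodes with one Tesla C1060 each
    NodeGroup("head", 1, 2, 4, 24),
]


def totals(groups, role=None):
    """Sum nodes, CPU cores, and memory (GB), optionally filtered by role."""
    chosen = [g for g in groups if role is None or g.role == role]
    nodes = sum(g.count for g in chosen)
    cores = sum(g.count * g.sockets * g.cores_per_socket for g in chosen)
    mem_gb = sum(g.count * g.mem_gb for g in chosen)
    return nodes, cores, mem_gb


if __name__ == "__main__":
    print("compute:", totals(GPU_CLUSTER, role="compute"))  # (12, 96, 192)
    print("all:", totals(GPU_CLUSTER))                      # (13, 104, 216)
```

The compute nodes total 96 cores and 192 GB of memory. Including the head node gives 104 cores and 216 GB.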

PS3 Cluster

The PS3 cluster consists of eight PS3 consoles, each with an IBM Cell Broadband Engine (CellBE) processor with the following specifications:

- One 2-way SMT PowerPC core (PPE) at 3.2 GHz, with a 32 kB L1 instruction cache, a 32 kB L1 data cache, and a 512 kB lockable L2 cache.
- 256 MB of XDR DRAM.
- Six usable SPEs. A seventh is reserved by Sony's virtualization software, and the eighth is disabled in hardware in the PS3 version of the CellBE. Each SPE runs at 3.2 GHz with a SIMD vector unit, 128 SIMD general-purpose registers, and 256 kB of SRAM local store.
- 218 GFLOPS of total floating-point performance, counting all 8 SPEs.
- 25.6 GB/s of memory bandwidth per interface to the SPEs and XDR RAM.
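One SPE is reserved and one is disabled, so software on each console sees six SPEs. Each SPE works out of its own 256 kB local store rather than directly out of XDR DRAM. The tally below uses only the figures above to show the resources actually available to applications across the eight consoles.

```python
# Tally of the PS3 cluster's application-visible SPE resources.

CONSOLES = 8
SPES_PER_CELL = 8
SPES_RESERVED = 1         # used by Sony's virtualization software
SPES_DISABLED = 1         # disabled in hardware on the PS3 CellBE
LOCAL_STORE_KB = 256      # SRAM local store per SPE
XDR_MB_PER_CONSOLE = 256  # XDR DRAM per console


def usable_spes_per_console() -> int:
    """SPEs left for applications after the reserved and disabled ones."""
    return SPES_PER_CELL - SPES_RESERVED - SPES_DISABLED


def cluster_summary() -> dict:
    """Aggregate usable SPEs, SPE local store (MB), and XDR DRAM (GB)."""
    spes = CONSOLES * usable_spes_per_console()
    return {
        "usable_spes": spes,
        "local_store_mb": spes * LOCAL_STORE_KB / 1024,
        "xdr_dram_gb": CONSOLES * XDR_MB_PER_CONSOLE / 1024,
    }


if __name__ == "__main__":
    print(cluster_summary())
```

This comes to 48 usable SPEs with 12 MB of local store in total, plus 2 GB of XDR DRAM across the cluster.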

SRC6-HiBar

The SRC6 with Hi-Bar platform consists of a very high-speed crossbar switch (Hi-Bar); 2 memory chassis, each containing 8 GB of memory; 4 Multi-Adaptive Processor (MAP) boards, each containing 2 user-programmable Xilinx Virtex-II 6000 FPGAs and 6 banks of 4 MB DDR SRAM; and 2 CPU chassis, each containing a SNAP board, two 2.8 GHz Xeon processors, and 1 GB of DDR SDRAM.

SRC6e

The SRC6e platform consists of 2 Multi-Adaptive Processor (MAP) boards, each containing 2 user-programmable Xilinx Virtex-II 6000 FPGAs and 6 banks of 4 MB DDR SRAM; and 2 CPU chassis, each containing a SNAP board, two 1 GHz Pentium III processors, and 1 GB of DDR SDRAM.

SGI Altix - RASC

The SGI Altix-RASC platform consists of an Altix 350 chassis with two 1.4 GHz Itanium 2 processors and 4 GB of DDR2 SDRAM, and a RASC chassis containing a Xilinx Virtex-II 6000 FPGA with 3 banks of 4 MB QDR SRAM.

Starbridge HC-36M

The Starbridge HC-36M (HyperComputer, 36 million gates) consists of two 2.4 GHz Xeon processors, 4 GB of DDR SDRAM, and an HC Quad PCI-X daughter card carrying 4 user-programmable Xilinx Virtex-II 6000 FPGAs, each with 2 GB of DDR2 SDRAM.

Lyra Cluster

The Lyra cluster consists of 9 CPU nodes, including the head node. Each node contains two quad-core Intel Xeon E5310 processors at 1.60 GHz, 4 GB of DDR SDRAM, and a Myrinet NIC. All nodes are interconnected via a high-speed Myrinet switch that provides 2.6 µs MPI latency and 2 Gb/s of sustained communication bandwidth.
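The quoted latency and bandwidth can be combined in the standard latency-bandwidth (Hockney, or alpha-beta) model, T(n) = α + n/β. This estimates the cost of sending one n-byte MPI message. The sketch below applies that model with α = 2.6 µs and β = 2 Gb/s = 250 MB/s. It is a first-order approximation, not a measurement of Lyra, and it ignores contention and any protocol changes inside the MPI library.

```python
# Latency-bandwidth (alpha-beta / Hockney) model of point-to-point MPI cost.
# A first-order approximation using the quoted figures, not a measurement.

ALPHA_S = 2.6e-6             # MPI latency quoted above, in seconds
BETA_BYTES_PER_S = 2e9 / 8   # 2 Gb/s sustained bandwidth = 250 MB/s


def message_time_s(n_bytes, alpha=ALPHA_S, beta=BETA_BYTES_PER_S):
    """Estimated time to send one n-byte message: alpha + n / beta."""
    return alpha + n_bytes / beta


def half_bandwidth_size(alpha=ALPHA_S, beta=BETA_BYTES_PER_S):
    """Message size n_1/2 at which latency and transfer time are equal."""
    return alpha * beta


if __name__ == "__main__":
    for n in (8, 650, 1 << 20):
        print(n, f"{message_time_s(n) * 1e6:.1f} us")
    print(f"n_1/2 = {half_bandwidth_size():.0f} bytes")
```

The half-bandwidth size n_1/2 = αβ is 650 bytes. Messages much smaller than this are dominated by latency and much larger ones by bandwidth, so batching small messages pays off on this interconnect.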

SGI Origin 2000

The SGI Origin 2000 platform consists of 32 SGI R10000 processors and 16 GB of SDRAM, interconnected by a high-speed cache-coherent Non-Uniform Memory Access (ccNUMA) interconnect in a hypercube architecture.
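In a binary hypercube, each router address is a bit string and each link joins addresses that differ in exactly one bit. The minimum hop count between two routers is therefore the Hamming distance of their addresses. The example assumes the 32 processors form 16 two-processor nodes on 8 routers, a 3-dimensional hypercube; that mapping is not stated above.

```python
from itertools import combinations


def hops(a: int, b: int) -> int:
    """Links on a shortest path between routers a and b: their Hamming distance."""
    return bin(a ^ b).count("1")


def neighbors(node: int, dimension: int) -> list:
    """Routers one link away: flip each of the `dimension` address bits."""
    return [node ^ (1 << i) for i in range(dimension)]


def average_hops(dimension: int) -> float:
    """Mean shortest-path length over all distinct router pairs."""
    pairs = list(combinations(range(1 << dimension), 2))
    return sum(hops(a, b) for a, b in pairs) / len(pairs)


if __name__ == "__main__":
    DIMENSION = 3  # assumption: 32 CPUs -> 16 nodes -> 8 routers (a 3-cube)
    print(hops(0b000, 0b111))                 # 3, the diameter of a 3-cube
    print(neighbors(0, DIMENSION))            # [1, 2, 4]
    print(round(average_hops(DIMENSION), 3))  # 1.714
```

Under this assumption, the worst case is 3 hops and the average over distinct router pairs is 12/7, about 1.714 hops.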
